A commonly held email A/B split test best practice is to only test one change at a time.

The logic is simple. If you make multiple changes at once in your email split test treatment, you won’t know which change produced the uplift.

Whilst there is truth in this, sometimes you’re simply starting from the wrong place with your email split tests, and making just one change at a time may stop you from finding the best-performing email.

Test your way to the top

Let me explain with the help of a mountain why testing just one change at a time is not always a split test best practice.

Imagine you are visiting a range of mountains and want to reach the highest point.

The logic is easy: search for a route where every step takes you upwards. Each step is a test, and a step wins if it takes you higher.

You’ll find the highest point (the optimal email) by testing successively until you reach the summit.

Now imagine that when you reach the top, you finally notice there are several mountains, and the highest one is not the one you started climbing. You reached the highest point on the wrong mountain.

If only you’d visited a few mountains before deciding which to climb.

You optimised performance, but optimised a sub-optimal concept.
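The mountain analogy is what computer scientists call hill climbing, and the trap it describes is a local maximum. Below is a minimal Python sketch, assuming a made-up one-dimensional "landscape" of email concept scores; the numbers and the function names `hill_climb` and `multi_start_climb` are hypothetical, for illustration only. Climbing one step at a time from the first concept stops on the lower peak, whereas climbing from a few different starting concepts (multi-start hill climbing, the "visit a few mountains first" idea) finds the higher one.

```python
# Hypothetical "performance landscape": each index is an email concept,
# each value a made-up conversion score. There are two peaks:
# a lower one at index 2 (score 5) and the true summit at index 8 (score 9).
LANDSCAPE = [1, 3, 5, 4, 2, 3, 6, 8, 9, 7]


def hill_climb(start, landscape):
    """One change at a time: move to a neighbouring concept only if it scores higher."""
    pos = start
    while True:
        neighbours = [p for p in (pos - 1, pos + 1) if 0 <= p < len(landscape)]
        best = max(neighbours, key=lambda p: landscape[p])
        if landscape[best] <= landscape[pos]:
            return pos  # no higher neighbour: a peak, but not necessarily the highest
        pos = best


def multi_start_climb(starts, landscape):
    """Visit several mountains first: climb from each start and keep the highest peak."""
    peaks = [hill_climb(s, landscape) for s in starts]
    return max(peaks, key=lambda p: landscape[p])


print(hill_climb(0, LANDSCAPE))              # 2 (stuck on the lower peak)
print(multi_start_climb([0, 5], LANDSCAPE))  # 8 (the true summit)
```

Every start from index 0 to 4 ends on the lower peak, so a single climb only finds the summit if you happen to begin on the right mountain.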

The same is true of email.

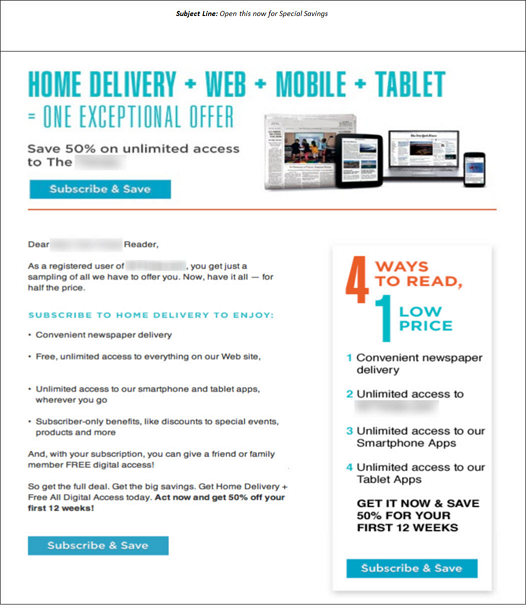

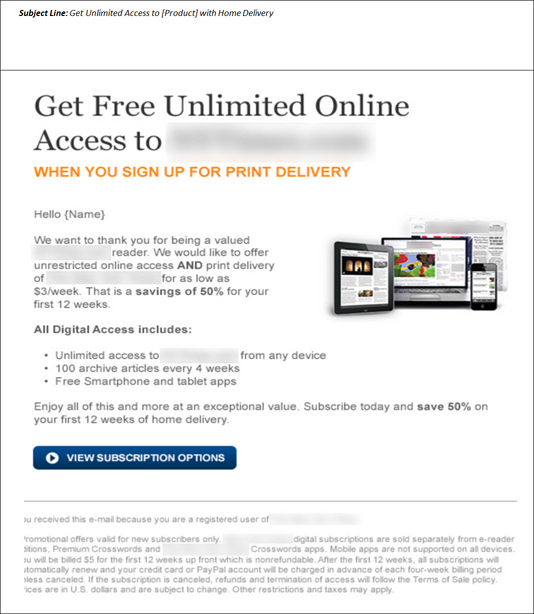

A good example, with the winner getting a conversion rate increase of 181% is a design test from Meclabs. Look at the large number of differences between the two designs.

Control design

Treatment design

There are numerous changes between the A/B split test control and the treatment:

- Removal of the graphical header, replaced with a simple text headline

- Subheadline added, explaining the action needed: “when you sign up for print delivery”

- Key benefit changed from a 50% saving to free unlimited online access. It’s still the same overall offer, just with a different emphasis

- Removal of the right-column summary listing four benefits

- Call to action changed to a smaller step, ‘view options’ rather than ‘subscribe’

- Different font colors

- Subject line change

- Body copy made more human, less corporate in style

Imagine if the changes had been made one at a time. It’s hard to see how you would get from the control to the winning treatment one change at a time.

Individually made changes might even have been rejected. Had the winning subject line, “Get unlimited access to [product] with home delivery”, been split tested using the control email design, it would likely have failed, because it’s not consistent with the control email’s headline and body copy.

The uplift comes from many changes working together, from the overall concept change.

What about multivariate split tests?

Multivariate split tests allow several changes to be made at once and every combination to be tested. This keeps some of the benefits of one-change A/B split testing, as the impact of each individual change is measured.

However, in most cases the tests are still constrained by the original concept, so they are more like A/B split testing on steroids than a test of new concepts.

Email split test best practice – both revolution and evolution

This doesn’t make split testing only one change wrong. Just don’t be a slave to a so-called email best practice.

Changing lots of things is a revolution, whereas changing one thing is evolution. Both approaches to improving email performance are useful.

- Evolutionary testing makes small changes, testing one thing at a time to evolve an email into a better version of the same concept

- Revolutionary testing makes many changes and may change the whole basis of the concept

Being a revolutionary split tester can save time compared with making one change at a time, albeit with less clarity about which change drove the result.

There is a trade-off between determining the power of individual elements and the time taken to make your boss happy and increase revenue.

Ultimately, email split testing is limited by the effort needed to test and the amount of data available for each split test sample cell.

Next time you’re creating an email test, don’t be afraid to re-think from a blank sheet.

But remember, the one email split test best practice not to ignore is to check any test results for statistical significance using a calculator.
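Significance calculators differ in their method, but a common one for comparing two conversion rates is the pooled two-proportion z-test. Here is a minimal Python sketch of that test, assuming hypothetical send and conversion counts; the function names are illustrative. It also computes relative uplift, (B − A) / A, the form in which figures like the 181% above are usually quoted.

```python
from math import erf, sqrt


def relative_uplift(conv_a, n_a, conv_b, n_b):
    """Relative change in conversion rate of treatment B over control A (0.4 = +40%)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    return (rate_b - rate_a) / rate_a


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    # Standard normal CDF via erf, then p = 2 * (1 - Phi(|z|))
    phi = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return 2 * (1 - phi)


# Hypothetical results: 5,000 recipients per cell
print(round(relative_uplift(100, 5000, 140, 5000), 2))         # 0.4
print(round(two_proportion_p_value(100, 5000, 140, 5000), 3))  # 0.009
```

A p-value below the threshold you set before the test (0.05 is a common convention) suggests the difference is unlikely to be chance alone. With these numbers the 40% uplift passes; with the same cell sizes, 110 conversions against 100 would not.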